Apple Advances AI on iPhones with Innovative Flash Memory Use for Enhanced LLM Performance

Apple is reportedly making significant progress in AI and machine learning, with a particular focus on running large language models (LLMs) on devices with limited memory, such as iPhones. This development could lead to the introduction of Apple GPT, the company’s in-house AI model, sooner than anticipated.


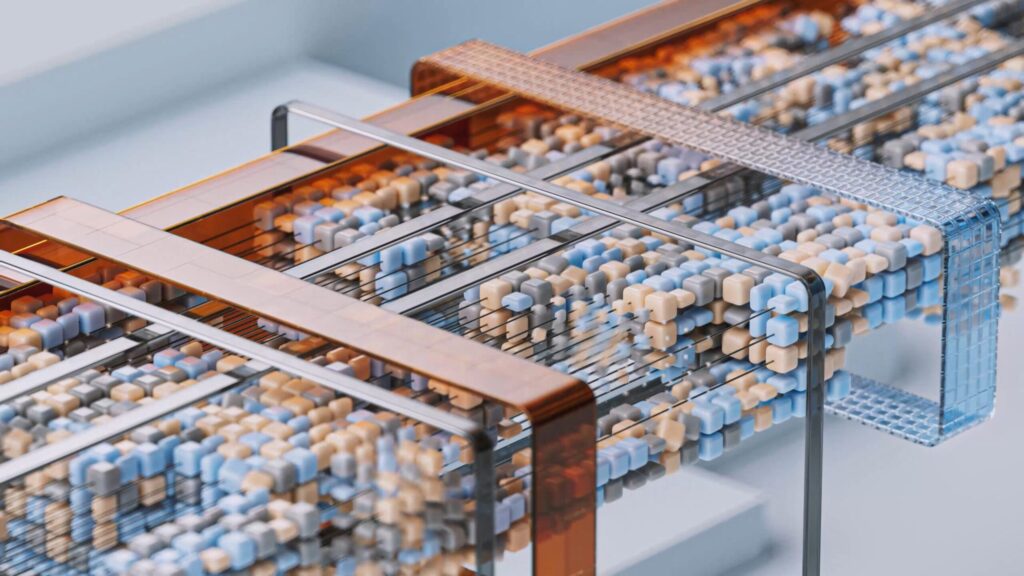

Apple’s AI researchers have developed a new approach to deploying LLMs on mobile devices. The main obstacle has been the large amount of data and memory that LLMs, such as those behind ChatGPT, require. Apple’s team instead keeps the model’s parameters in flash memory, the same storage that holds apps and photos, and loads only the parts it needs into RAM during inference.
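
To put the memory problem in perspective, here is a back-of-the-envelope calculation of how much space a model’s weights alone occupy. The 7-billion-parameter model, 16-bit weights, and 8 GB RAM budget are illustrative assumptions, not figures taken from Apple’s paper.

```python
def weight_footprint_gb(num_params: int, bytes_per_param: float) -> float:
    """Bytes needed just to hold the model weights, in decimal GB (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Illustrative assumptions: a 7-billion-parameter model stored as 16-bit floats
# (2 bytes per parameter), compared with a hypothetical 8 GB RAM budget.
params = 7_000_000_000
fp16_gb = weight_footprint_gb(params, 2)
dram_budget_gb = 8.0

print(f"fp16 weights: {fp16_gb:.1f} GB")            # fp16 weights: 14.0 GB
print(f"fits in RAM: {fp16_gb <= dram_budget_gb}")  # fits in RAM: False
```

Even before counting activations or the rest of the system, the weights in this example exceed the assumed RAM budget, while they would fit comfortably in typical phone flash storage.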

In their research paper, “LLM in a flash: Efficient Large Language Model Inference with Limited Memory,” Apple’s researchers point out that mobile devices have far more flash storage than RAM, the memory normally used to run LLMs. Their method works around the RAM limit with two key techniques: one reduces how much data has to be transferred from flash, and the other reads that data in larger chunks to make better use of flash memory’s throughput.

The first technique, windowing, keeps in RAM the model data that was used for the most recent tokens and reuses it, loading from flash only the data that each new token additionally requires instead of fetching everything again. This cuts the amount of data read from flash, which speeds up inference.
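
A minimal sketch of the windowing idea follows. It assumes the set of feed-forward neurons each token needs is already known (the paper predicts which neurons will be active; that step is not shown). `NeuronWindowCache`, `load_from_flash`, and the window size are hypothetical names and values chosen for illustration, not Apple’s code.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, Set


class NeuronWindowCache:
    """Sliding-window cache of feed-forward neuron weights (illustrative sketch).

    Neurons used by any of the last `window` tokens stay resident in RAM;
    only neurons that are not already resident are read from flash.
    """

    def __init__(self, window: int, load_from_flash: Callable[[int], Any]):
        self.window = window
        self.load_from_flash = load_from_flash   # hypothetical callback: neuron id -> weights
        self.history: Deque[Set[int]] = deque()  # active-neuron sets of recent tokens
        self.resident: Dict[int, Any] = {}       # neuron id -> weights held in RAM

    def step(self, active_neurons: Set[int]) -> int:
        """Handle one token and return how many neurons had to be read from flash."""
        to_load = active_neurons - set(self.resident)
        for n in to_load:
            self.resident[n] = self.load_from_flash(n)

        # Slide the window forward by one token.
        self.history.append(set(active_neurons))
        if len(self.history) > self.window:
            self.history.popleft()

        # Evict neurons that no token in the current window still needs.
        still_needed = set().union(*self.history)
        for n in set(self.resident) - still_needed:
            del self.resident[n]
        return len(to_load)


cache = NeuronWindowCache(window=2, load_from_flash=lambda n: f"weights[{n}]")
print(cache.step({1, 2, 3}))  # 3: cold cache, everything comes from flash
print(cache.step({2, 3, 4}))  # 1: only neuron 4 is new
print(cache.step({3, 4, 5}))  # 1: only neuron 5 is new; neuron 1 is evicted
```

Because consecutive tokens tend to use overlapping sets of neurons, most of each token’s data is already in RAM, and only the small difference has to cross from flash.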

The second technique, row-column bundling, is akin to reading whole sentences of a book rather than individual words. By storing related pieces of the model’s data next to each other, it lets the system read larger contiguous chunks from flash, which flash memory handles much faster than many small reads, so the model can generate text more quickly.
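
One way to picture row-column bundling: in a feed-forward layer, each neuron needs one column of the up-projection matrix and the matching row of the down-projection matrix. Storing the two back to back means one contiguous read of twice the size replaces two separate reads. The sketch below uses a flat Python list to stand in for a file on flash; the matrix orientation, function names, and toy values are assumptions for illustration, not Apple’s implementation.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def bundle_rows_columns(w_up: Matrix, w_down: Matrix) -> List[float]:
    """Lay out feed-forward weights so each neuron's data is contiguous.

    Assumed shapes: w_up is d_model x d_ff (column i feeds neuron i) and
    w_down is d_ff x d_model (row i is scaled by neuron i's activation).
    Record i = column i of w_up followed by row i of w_down.
    """
    d_model, d_ff = len(w_up), len(w_up[0])
    flat: List[float] = []
    for i in range(d_ff):
        flat.extend(w_up[r][i] for r in range(d_model))  # column i of w_up
        flat.extend(w_down[i])                            # row i of w_down
    return flat


def read_neuron(flat: Sequence[float], i: int, d_model: int) -> Tuple[List[float], List[float]]:
    """Fetch everything neuron i needs with a single contiguous read of 2 * d_model values."""
    start = i * 2 * d_model
    record = list(flat[start:start + 2 * d_model])
    return record[:d_model], record[d_model:]


w_up = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0]]    # d_model = 2, d_ff = 3
w_down = [[10.0, 20.0],
          [30.0, 40.0],
          [50.0, 60.0]]
flat = bundle_rows_columns(w_up, w_down)
print(read_neuron(flat, 1, d_model=2))  # ([2.0, 5.0], [30.0, 40.0])
```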

According to Apple’s researchers, combining these methods lets a device run models up to twice the size of its available RAM, with inference 4-5 times faster on CPUs and 20-25 times faster on GPUs than naively loading the model from flash.

This gain in efficiency could significantly benefit future iPhone models. It could enable more sophisticated Siri capabilities, real-time language translation, and enhanced AI-driven features in photography and augmented reality applications.

Apple’s work in generative AI includes its proprietary model known as “Ajax,” which could eventually be integrated into Siri. Ajax reportedly has 200 billion parameters, which would let it rival OpenAI’s GPT-3 and GPT-4 models in complexity and language understanding.

Known internally as Apple GPT, Ajax is part of an effort to unify machine learning development across Apple. The move points to a broader strategy of integrating AI more deeply into Apple’s products and services to improve the user experience.

In conclusion, Apple’s work on advanced AI models, and on techniques for running them on devices with limited memory, could bring significant changes to its product line. With the potential integration of Apple GPT, the company is positioning itself at the forefront of AI innovation in consumer electronics.